To Tame Inflation, Talk Isn’t Enough

Central bankers’ proclamations have little effect on consumers—but rate hikes matter more.

To Tame Inflation, Talk Isn’t Enough

There is no single perfect model for forecasting GDP, or a company’s earnings. A model may work well sometimes, but other times be wildly inaccurate, like during a financial crisis, when changing interest rates and government actions can alter the economic landscape.

According to University of Heidelberg postdoctoral scholar Stefan Richter and Chicago Booth’s Ekaterina Smetanina, testing for the best model assumes there actually is an always-best model. They’ve put together a framework for analyzing how forecasting models perform as conditions change, which allows for the possibility that the performance of a model, even the best ones, will change over time.

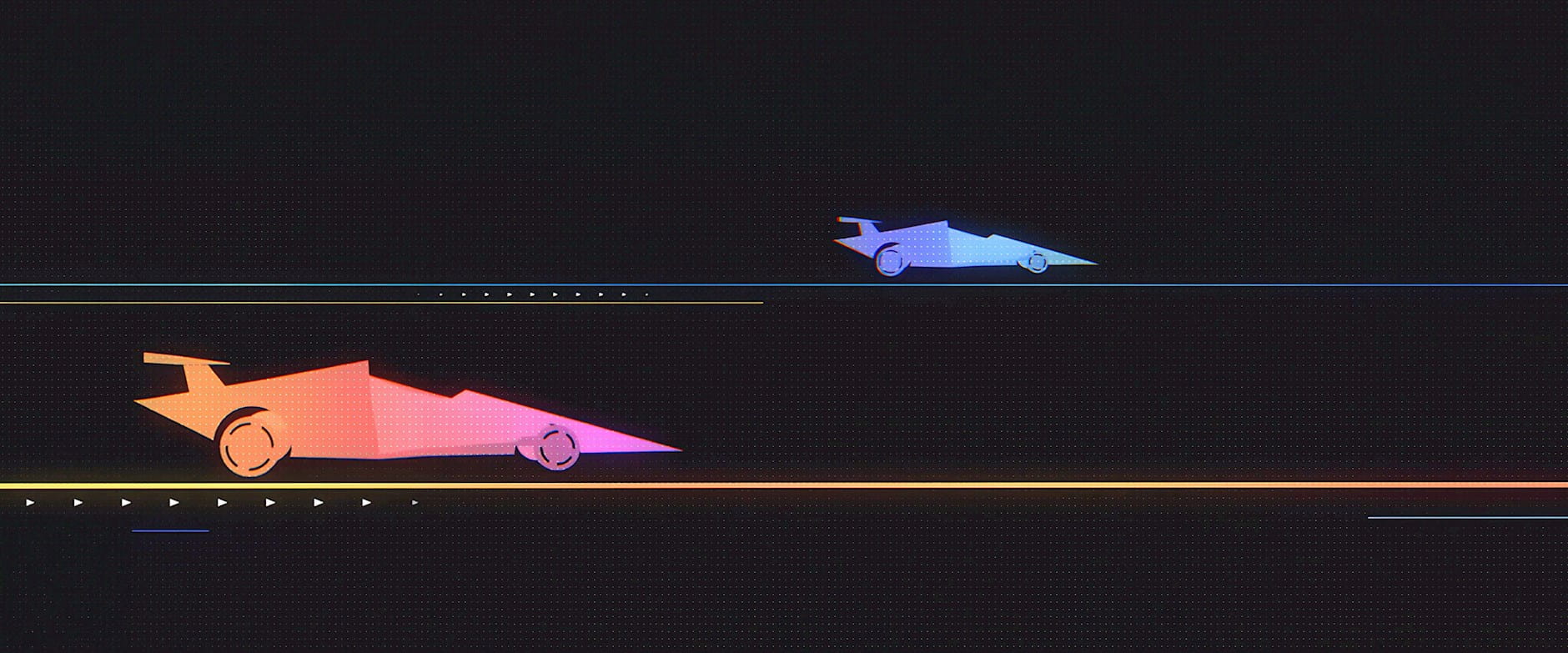

Imagine that two race cars, each representing a forecasting model, are set loose on a track. The length of that track represents the data that are available. If the track were infinitely long and perfectly uniform, the winner would ultimately become very clear. But when you’re talking about forecasting something using real data, such as quarterly GDP figures, the data are limited in length and the model’s performance may change as the financial and economic landscape changes.

We need to decide how much data to use for estimation, how much room those cars have to reach their top speeds, what obstacles they encounter that may cause them to overtake each other a lot. Then the real question is: How do we determine which car is fastest overall?

The method used by most researchers is to take much of the historical data available to build parameters for the model and to verify the model using a smaller, more recent data set. This involves creating a splitting point between those two data sets in the hope that there is enough data to build the model and to evaluate it. But this leaves the decision of where to make the split to researchers with little formal methodology to guide them, and their choice is crucial to the end result.

Richter and Smetanina propose a more robust method-selection process that avoids this splitting-point problem and instead examines each model’s performance against the others’ as a function of time across several conditions, much like comparing the speed of those two race cars over all possible terrains and not just two rigid choices.

The researchers created two methods to compare a forecast’s performance. One aggregates past performance to measure how it’s performed historically, and another forecasts which model is most likely to outperform in the future. The researchers suggest that with these two perspectives, economic forecasts may be better equipped to go the distance because a model that performed well in the past won’t necessarily continue doing so, and models that didn’t work well before could do so in the future.

Central bankers’ proclamations have little effect on consumers—but rate hikes matter more.

To Tame Inflation, Talk Isn’t Enough

While consumers are a little hazy about overall inflation, asking them about prices for individual categories yields more realistic forecasts.

People Can Forecast Price Rises—If Asked the Right Questions

Chicago Booth’s Raghuram G. Rajan explains why India’s strengths play to services-based development.

Capitalisn’t: Raghuram Rajan’s Vision of an Indian Path to DevelopmentYour Privacy

We want to demonstrate our commitment to your privacy. Please review Chicago Booth's privacy notice, which provides information explaining how and why we collect particular information when you visit our website.